Stock market fluctuations¶

The fluctuations of stock prices represent an intriguing example of a complex random walk. Stock prices are influenced by transactions that are carried out over a broad range of time scales, from micro- to milliseconds for high-frequency hedge funds over several hours or days for day-traders to months or years for long-term investors. We therefore expect that the statistical properties of stock price fluctuations, like volatility and auto-correlation of returns, are not constant over time, but show significant fluctuations of their own. Time-varying parameter models can account for such changes in volatility and auto-correlation and update their parameter estimates in real-time.

There are, however, certain events that render previously gathered information about volatility or autocorrelation of returns completely useless. News announcements that are unexpected at least to some extent, for example, can induce increased trading activity, as market participants update their orders according to their interpretation of novel information. The resulting “non-scheduled” price corrections can often not be adequately described by the current estimates of volatility. Even a model that accounts for gradual variations in volatility cannot reproduce these large price corrections. Instead, when such an event happens, it becomes favorable to forget about previously gathered parameter estimates and completely start over. In this example, we use the bayesloop framework to specifically evaluate the probability of previously acquired information becoming useless. We interpret this probability value as a risk metric and evaluate it for each minute of an individual trading day (Nov 28, 2016) for the exchange-traded fund SPY. The announcement of macroeconomic indicators on this day results in a significant increase of our risk metric in intra-day trading.

DISCLAIMER: This website does not provide tax, legal or accounting advice. This material has been prepared for informational purposes only, and is not intended to provide, and should not be relied on for, tax, legal or accounting advice. You should consult your own tax, legal and accounting advisors before engaging in any transaction.

Note: The intraday pricing data used in this example is obtained via Google Finance:

https://www.google.com/finance/getprices?q=SPY&i=60&p=1d&f=d,c

This request returns a list of minute close prices (and date/time

information; f=d,c) for SPY for the last trading period. The maximum

look-back period is 14 days (p=14d) for requests of minute-scale

data (i=60).

In [1]:

%matplotlib inline

import numpy as np

import bayesloop as bl

import sympy.stats as stats

from tqdm import tqdm_notebook

import matplotlib.pyplot as plt

import seaborn as sns

sns.set_color_codes() # use seaborn colors

# minute-scale pricing data

prices = np.array(

[ 221.14 , 221.09 , 221.17 , 221.3 , 221.3 , 221.26 ,

221.32 , 221.17 , 221.2 , 221.27 , 221.19 , 221.12 ,

221.08 , 221.1 , 221.075, 221.03 , 221.04 , 221.03 ,

221.11 , 221.14 , 221.135, 221.13 , 221.04 , 221.15 ,

221.21 , 221.25 , 221.21 , 221.17 , 221.21 , 221.2 ,

221.21 , 221.17 , 221.1 , 221.13 , 221.18 , 221.15 ,

221.2 , 221.2 , 221.23 , 221.25 , 221.25 , 221.25 ,

221.25 , 221.22 , 221.2 , 221.15 , 221.18 , 221.13 ,

221.1 , 221.08 , 221.13 , 221.09 , 221.08 , 221.07 ,

221.09 , 221.1 , 221.06 , 221.1 , 221.11 , 221.18 ,

221.26 , 221.46 , 221.38 , 221.35 , 221.3 , 221.18 ,

221.18 , 221.18 , 221.17 , 221.175, 221.13 , 221.03 ,

220.99 , 220.97 , 220.9 , 220.885, 220.9 , 220.91 ,

220.94 , 220.935, 220.84 , 220.86 , 220.89 , 220.91 ,

220.89 , 220.84 , 220.83 , 220.74 , 220.755, 220.72 ,

220.69 , 220.72 , 220.79 , 220.79 , 220.81 , 220.82 ,

220.8 , 220.74 , 220.75 , 220.73 , 220.69 , 220.72 ,

220.73 , 220.69 , 220.71 , 220.72 , 220.8 , 220.81 ,

220.79 , 220.8 , 220.79 , 220.74 , 220.77 , 220.79 ,

220.87 , 220.86 , 220.92 , 220.92 , 220.88 , 220.87 ,

220.88 , 220.87 , 220.94 , 220.93 , 220.92 , 220.94 ,

220.94 , 220.9 , 220.94 , 220.9 , 220.91 , 220.85 ,

220.85 , 220.83 , 220.85 , 220.84 , 220.87 , 220.91 ,

220.85 , 220.77 , 220.83 , 220.79 , 220.78 , 220.78 ,

220.79 , 220.83 , 220.87 , 220.88 , 220.9 , 220.97 ,

221.05 , 221.02 , 221.01 , 220.99 , 221.04 , 221.05 ,

221.06 , 221.07 , 221.12 , 221.06 , 221.07 , 221.03 ,

221.01 , 221.03 , 221.03 , 221.01 , 221.02 , 221.04 ,

221.04 , 221.07 , 221.105, 221.1 , 221.09 , 221.08 ,

221.07 , 221.08 , 221.03 , 221.06 , 221.1 , 221.11 ,

221.11 , 221.18 , 221.2 , 221.34 , 221.29 , 221.235,

221.22 , 221.2 , 221.21 , 221.22 , 221.19 , 221.17 ,

221.19 , 221.13 , 221.13 , 221.12 , 221.14 , 221.11 ,

221.165, 221.19 , 221.18 , 221.19 , 221.18 , 221.15 ,

221.16 , 221.155, 221.185, 221.19 , 221.2 , 221.2 ,

221.16 , 221.18 , 221.16 , 221.11 , 221.07 , 221.095,

221.08 , 221.08 , 221.09 , 221.11 , 221.08 , 221.08 ,

221.1 , 221.08 , 221.11 , 221.07 , 221.11 , 221.1 ,

221.09 , 221.07 , 221.14 , 221.12 , 221.08 , 221.09 ,

221.05 , 221.08 , 221.065, 221.05 , 221.06 , 221.085,

221.095, 221.07 , 221.05 , 221.09 , 221.1 , 221.145,

221.12 , 221.14 , 221.12 , 221.12 , 221.12 , 221.11 ,

221.14 , 221.15 , 221.13 , 221.12 , 221.11 , 221.105,

221.105, 221.13 , 221.14 , 221.1 , 221.105, 221.105,

221.11 , 221.13 , 221.15 , 221.11 , 221.13 , 221.08 ,

221.11 , 221.12 , 221.12 , 221.12 , 221.13 , 221.15 ,

221.18 , 221.21 , 221.18 , 221.15 , 221.15 , 221.15 ,

221.15 , 221.15 , 221.13 , 221.13 , 221.16 , 221.13 ,

221.11 , 221.12 , 221.09 , 221.07 , 221.06 , 221.04 ,

221.06 , 221.09 , 221.07 , 221.045, 221. , 220.99 ,

220.985, 220.95 , 221. , 221.01 , 221.005, 220.99 ,

221.03 , 221.055, 221.06 , 221.03 , 221.03 , 221.03 ,

221. , 220.95 , 220.96 , 220.97 , 220.965, 220.97 ,

220.94 , 220.93 , 220.9 , 220.9 , 220.9 , 220.91 ,

220.94 , 220.92 , 220.94 , 220.91 , 220.92 , 220.935,

220.875, 220.89 , 220.91 , 220.92 , 220.93 , 220.93 ,

220.91 , 220.9 , 220.89 , 220.9 , 220.9 , 220.93 ,

220.94 , 220.92 , 220.93 , 220.88 , 220.88 , 220.86 ,

220.9 , 220.92 , 220.85 , 220.83 , 220.83 , 220.795,

220.81 , 220.78 , 220.7 , 220.69 , 220.6 , 220.58 ,

220.61 , 220.63 , 220.68 , 220.63 , 220.63 , 220.595,

220.66 , 220.645, 220.64 , 220.6 , 220.579, 220.53 ,

220.53 , 220.5 , 220.42 , 220.49 , 220.49 , 220.5 ,

220.475, 220.405, 220.4 , 220.425, 220.385, 220.37 ,

220.49 , 220.46 , 220.45 , 220.48 , 220.51 , 220.48 ]

)

plt.figure(figsize=(8,2))

plt.plot(prices)

plt.ylabel('price [USD]')

plt.xlabel('Nov 28, 2016')

plt.xticks([30, 90, 150, 210, 270, 330, 390],

['10am', '11am', '12pm', '1pm', '2pm', '3pm', '4pm'])

plt.xlim([0, 390]);

Persistent random walk model¶

In this example, we choose to model the price evolution of SPY as a simple, well-known random walk model: the auto-regressive process of first order. We assume that subsequent log-return values \(r_t\) of SPY obey the following recursive instruction:

with the time-varying correlation coefficient \(\rho_t\) and the time-varying volatility parameter \(\sigma_t\). Here, \(\epsilon_t\) is drawn from a standard normal distribution and represents the driving noise of the process and the scaling factor \(\sqrt{1-\rho_t^2}\) makes sure that \(\sigma_t\) is the standard deviation of the process. In bayesloop, we define this observation model as follows:

bl.om.ScaledAR1('rho', bl.oint(-1, 1, 100), 'sigma', bl.oint(0, 0.006, 400))

This implementation of the correlated random walk model will be discussed in detail in the next section.

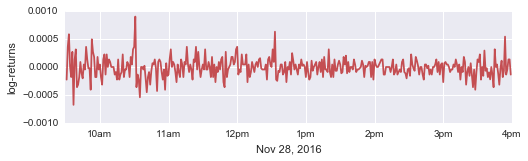

Looking at the log-returns of our example, we find that the magnitude of the fluctuations (i.e. the volatility) is higher after market open and before market close. While these variations happen quite gradually, a large peak around 10:30am (and possibly another one around 12:30pm) represents an abrupt price correction.

In [2]:

logPrices = np.log(prices)

logReturns = np.diff(logPrices)

plt.figure(figsize=(8,2))

plt.plot(np.arange(1, 390), logReturns, c='r')

plt.ylabel('log-returns')

plt.xlabel('Nov 28, 2016')

plt.xticks([30, 90, 150, 210, 270, 330, 390],

['10am', '11am', '12pm', '1pm', '2pm', '3pm', '4pm'])

plt.yticks([-0.001, -0.0005, 0, 0.0005, 0.001])

plt.xlim([0, 390]);

Online study¶

bayesloop provides the class OnlineStudy to analyze on-going data

streams and perform model selection for each new data point. In contrast

to other Study types, more than just one transition model can be

assigned to an OnlineStudy instance, using the method

addTransitionModel. Here, we choose to add two distinct scenarios:

- normal: Both volatility and correlation of subsequent returns are allowed to vary gradually over time, to account for periods with above average trading activity after market open and before market close. This scenario therefore represents a smoothly running market.

- chaotic: This scenario assumes that we know nothing about the value of volatility or correlation. The probability that this scenario gets assigned in each minute therefore represents the probability that previously gathered knowledge about market dynamics cannot explain the last price movement.

By evaluating how likely the chaotic scenario explains each new minute close price of SPY compared to the normal scenario, we can identify specific events that lead to extreme fluctuations in intra-day trading.

First, we create a new instance of the OnlineStudy class and set the

observation model introduced above. The keyword argument

storeHistory is set to True, because we want to access the

parameter estimates of all time steps afterwards, not only the estimates

of the last time step.

In [3]:

S = bl.OnlineStudy(storeHistory=True)

L = bl.om.ScaledAR1('rho', bl.oint(-1, 1, 100),

'sigma', bl.oint(0, 0.006, 400))

S.set(L)

+ Created new study.

--> Hyper-study

--> Online study

+ Observation model: Scaled autoregressive process of first order (AR1). Parameter(s): ['rho', 'sigma']

Note: While the parameter rho is naturally constrained to the

interval ]-1, 1[, the parameter boundaries of sigma have to be

specified by the user. Typically, one can review past log-return data

and estimate the upper boundary as a multiple of the standard deviation

of past data points.

Both scenarios for the dynamic market behavior are implemented via the

method add. The normal case consists of a combined transition

model that allows both volatility and correlation to perform a Gaussian

random walk. As the standard deviation (magnitude) of the parameter

fluctuations is a-priori unknown, we supply a wide range of possible

values (bl.cint(0, 1.5e-01, 15) for rho, which corresponds to 15

equally spaced values within the closed interval [0, 0.15], and 50

equally spaced values within the interval [0,

1.5$:raw-latex:cdot\(10\)^{-4}$] for sigma).

Since we have no prior assumptions about the standard deviations of the

Gaussian random walks, we let bayesloop assign equal probability to

all values. If one wants to analyze more than just one trading day, the

(hyper-)parameter distributions from the end of one day can be used as

the prior distribution for the next day! One might also want to suppress

large variations of rho or sigma with an exponential prior,

e.g.:

bl.tm.GaussianRandomWalk('s1', bl.cint(0, 1.5e-01, 15), target='rho',

prior=stats.Exponential('expon', 1./3.0e-02))

The chaotic case is implemented by the transition model

Independent. This model sets a flat prior distribution for the

parameters volatility and correlation in each time step. This way,

previous knowledge about the parameters is not used when analyzing a new

data point.

In [4]:

T1 = bl.tm.CombinedTransitionModel(

bl.tm.GaussianRandomWalk('s1', bl.cint(0, 1.5e-01, 15), target='rho'),

bl.tm.GaussianRandomWalk('s2', bl.cint(0, 1.5e-04, 50), target='sigma')

)

T2 = bl.tm.Independent()

S.add('normal', T1)

S.add('chaotic', T2)

+ Added transition model: normal (750 combination(s) of the following hyper-parameters: ['s1', 's2'])

+ Added transition model: chaotic (no hyper-parameters)

Before any data points are passed to the study instance, we further provide prior probabilities for the two scenarios. We expect about one news announcement containing unexpected information per day and set a prior probability of \(1/390\) for the chaotic scenario (one normal trading day consists of 390 trading minutes).

In [5]:

S.setTransitionModelPrior([389/390., 1/390.])

+ Set custom transition model prior.

Finally, we can supply log-return values to the study instance, data

point by data point. We use the step method to infer new parameter

estimates and the updated probabilities of the two scenarios. Note that

in this example, we use a for loop to feed all data points to the

algorithm because all data points are already available. In a real

application of the OnlineStudy class, one can supply each new data

point as it becomes available and analyze it in real-time.

In [6]:

for r in tqdm_notebook(logReturns):

S.step(r)

+ Start model fit

+ Not enough data points to start analysis. Will wait for more data.

+ Set uniform prior with parameter boundaries.

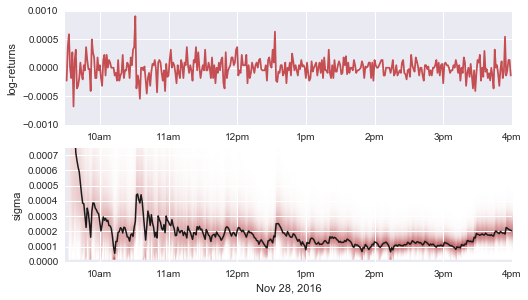

Volatility spikes¶

Before we analyze how the probability values of our two market scenarios

change over time, we check whether the inferred temporal evolution of

the time-varying parameters is realistic. Below, the log-returns are

displayed together with the inferred marginal distribution (shaded red)

and mean value (black line) of the volatility parameter, using the

method plotParameterEvolution.

In [7]:

plt.figure(figsize=(8, 4.5))

# data plot

plt.subplot(211)

plt.plot(np.arange(1, 390), logReturns, c='r')

plt.ylabel('log-returns')

plt.xticks([30, 90, 150, 210, 270, 330, 390],

['10am', '11am', '12pm', '1pm', '2pm', '3pm', '4pm'])

plt.yticks([-0.001, -0.0005, 0, 0.0005, 0.001])

plt.xlim([0, 390])

# parameter plot

plt.subplot(212)

S.plot('sigma', color='r')

plt.xticks([28, 88, 148, 208, 268, 328, 388],

['10am', '11am', '12pm', '1pm', '2pm', '3pm', '4pm'])

plt.xlabel('Nov 28, 2016')

plt.ylim([0, 0.00075])

plt.xlim([-2, 388]);

Note that the volatility estimates of the first few trading minutes are not as accurate as later ones, as we initialize the algorithm with a non-informative prior distribution. One could of course provide a custom prior distribution as a more realistic starting point. Despite this fade-in period, the period of increased volatility after market open is captured nicely, as well as the (more subtle) increase in volatility during the last 45 minutes of the trading day. Large individual log-return values also result in an volatility spikes (around 10:30am and more subtle around 12:30pm).

Islands of stability¶

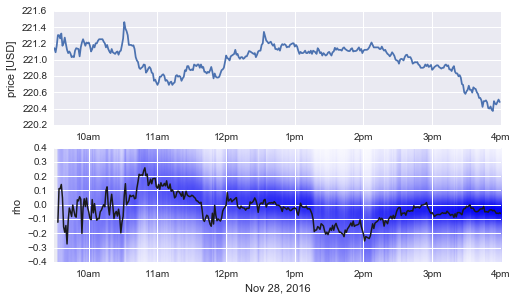

The persistent random walk model does not only provide information about the magnitude of price fluctuations, but further quantifies whether subsequent log-return values are correlated. A positive correlation coefficient indicates diverging price movements, as a price increase is more likely followed by another increase, compared to a decrease. In contrast, a negative correlation coefficient indicates islands of stability, i.e. trading periods during which prices do not diffuse randomly (as with a corr. coeff. of zero). Below, we plot the price evolution of SPY on November 28, together with the inferred marginal distribution (shaded blue) and the corresponding mean value (black line) of the time-varying correlation coefficient.

In [8]:

plt.figure(figsize=(8, 4.5))

# data plot

plt.subplot(211)

plt.plot(prices)

plt.ylabel('price [USD]')

plt.xticks([30, 90, 150, 210, 270, 330, 390],

['10am', '11am', '12pm', '1pm', '2pm', '3pm', '4pm'])

plt.xlim([0, 390])

# parameter plot

plt.subplot(212)

S.plot('rho', color='#0000FF')

plt.xticks([28, 88, 148, 208, 268, 328, 388],

['10am', '11am', '12pm', '1pm', '2pm', '3pm', '4pm'])

plt.xlabel('Nov 28, 2016')

plt.ylim([-0.4, 0.4])

plt.xlim([-2, 388]);

As a correlation coefficient that deviates significantly from zero would be immediately exploitable to predict future price movements, we mostly find correlation values near zero (in accordance with the efficient market hypothesis). However, between 1:15pm and 2:15pm, we find a short period of negative correlation with a value around -0.2. During this period, subsequent price movements tend to cancel each other out, resulting in an unusually strong price stability.

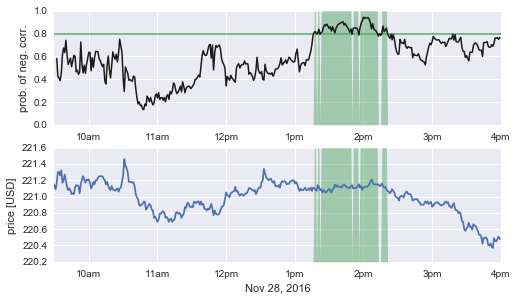

Using the Parser sub-module of bayesloop, we can evaluate the

probability that subsequent return values are negatively correlated. In

the figure below, we tag all time steps with a probability for

rho < 0 of 80% or higher and find that this indicator nicely

identifies the period of increased market stability!

Note: The arbitrary threshold of 80% for our market indicator is of course chosen with hindsight in this example. In a real application, more than one trading day of data needs to be analyzed to create robust indicators!

In [9]:

# extract parameter grid values (rho) and corresponding prob. values (p)

rho, p = S.getParameterDistributions('rho')

# evaluate Prob.(rho < 0) for all time steps

P = bl.Parser(S)

p_neg_rho = np.array([P('rho < 0.', t=t, silent=True) for t in range(1, 389)])

# plotting

plt.figure(figsize=(8, 4.5))

plt.subplot(211)

plt.axhline(y=0.8, lw=1.5, c='g')

plt.plot(p_neg_rho, lw=1.5, c='k')

plt.fill_between(np.arange(len(p_neg_rho)), 0, p_neg_rho > 0.8, lw=0, facecolor='g', alpha=0.5)

plt.xticks([28, 88, 148, 208, 268, 328, 388],

['10am', '11am', '12pm', '1pm', '2pm', '3pm', '4pm'])

plt.ylabel('prob. of neg. corr.')

plt.xlim([-2, 388])

plt.subplot(212)

plt.plot(prices)

plt.fill_between(np.arange(2, len(p_neg_rho)+2), 220.2, 220.2 + (p_neg_rho > 0.8)*1.4, lw=0, facecolor='g', alpha=0.5)

plt.ylabel('price [USD]')

plt.xticks([30, 90, 150, 210, 270, 330, 390],

['10am', '11am', '12pm', '1pm', '2pm', '3pm', '4pm'])

plt.xlim([0, 390])

plt.ylim([220.2, 221.6])

plt.xlabel('Nov 28, 2016');

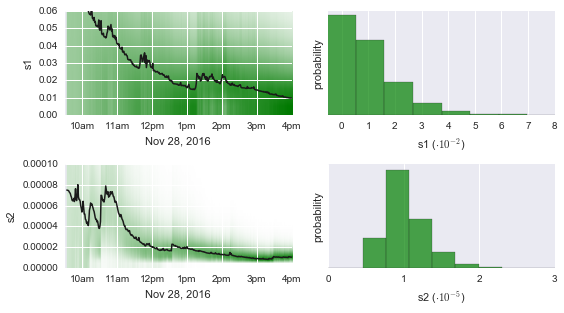

Automatic tuning¶

One major advantage of the OnlineStudy class is that it not only

infers the time-varying parameters of the low-level correlated random

walk (the observation model ScaledAR1), but further infers the

magnitude (the standard deviation of the transition model

GaussianRandomWalk) of the parameter fluctuations and thereby tunes

the parameter dynamics as new data arrives. As we can see below (left

sub-figures), both magnitudes - one for rho and one for sigma -

start off at a high level. This is due to our choice of a uniform prior,

assigning equal probability to all hyper-parameter values before seeing

any data. Over time, the algorithm learns that the true parameter

fluctuations are less severe than previously assumed and adjusts the

hyper-parameters accordingly. This newly gained information, summarized

in the hyper-parameter distributions of the last time step (right

sub-figures), could then represent the prior distribution for the next

trading day.

In [10]:

plt.figure(figsize=(8, 4.5))

plt.subplot(221)

S.plot('s1', color='green')

plt.xticks([28, 88, 148, 208, 268, 328, 388],

['10am', '11am', '12pm', '1pm', '2pm', '3pm', '4pm'])

plt.xlabel('Nov 28, 2016')

plt.xlim([-2, 388])

plt.ylim([0, 0.06])

plt.subplot(222)

S.plot('s1', t=388, facecolor='green', alpha=0.7)

plt.yticks([])

plt.xticks([0, 0.01, 0.02, 0.03, 0.04, 0.05, 0.06, 0.07, 0.08], ['0', '1', '2', '3', '4', '5', '6', '7', '8'])

plt.xlabel('s1 ($\cdot 10^{-2}$)')

plt.xlim([-0.005, 0.08])

plt.subplot(223)

S.plot('s2', color='green')

plt.xticks([28, 88, 148, 208, 268, 328, 388],

['10am', '11am', '12pm', '1pm', '2pm', '3pm', '4pm'])

plt.xlabel('Nov 28, 2016')

plt.xlim([-2, 388])

plt.ylim([0, 0.0001])

plt.subplot(224)

S.plot('s2', t=388, facecolor='green', alpha=0.7)

plt.yticks([])

plt.xticks([0, 0.00001, 0.00002, 0.00003], ['0', '1', '2', '3'])

plt.xlabel('s2 ($\cdot 10^{-5}$)')

plt.xlim([0, 0.00003])

plt.tight_layout()

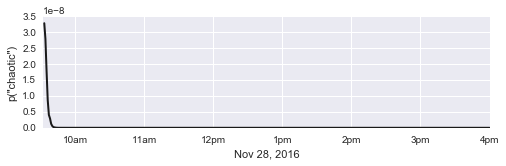

Real-time model selection¶

Finally, we investigate which of our two market scenarios - normal vs.

chaotic - can explain the price movements best. Using the method

plot('chaotic'), we obtain the probability values for the chaotic

scenario compared to the normal scenario, with respect to all past

data points:

In [11]:

plt.figure(figsize=(8, 2))

S.plot('chaotic', lw=2, c='k')

plt.xticks([28, 88, 148, 208, 268, 328, 388],

['10am', '11am', '12pm', '1pm', '2pm', '3pm', '4pm'])

plt.xlabel('Nov 28, 2016')

plt.xlim([0, 388])

plt.ylabel('p("chaotic")')

Out[11]:

<matplotlib.text.Text at 0xefc86a0>

As expected, the probability that the chaotic scenario can explain all past log-return values at a given point in time quickly falls off to practically zero. Indeed, a correlated random walk with slowly changing volatility and correlation of subsequent returns is better suited to describe the price fluctuations of SPY in the majority of time steps.

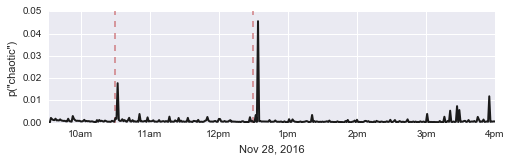

However, we may also ask for the probability that each individual

log-return value is produced by either of the two market scenarios by

using the keyword argument local=True:

In [12]:

plt.figure(figsize=(8, 2))

S.plot('chaotic', local=True, c='k', lw=2)

plt.xticks([28, 88, 148, 208, 268, 328, 388],

['10am', '11am', '12pm', '1pm', '2pm', '3pm', '4pm'])

plt.xlabel('Nov 28, 2016')

plt.xlim([0, 388])

plt.ylabel('p("chaotic")')

plt.axvline(58, 0, 1, zorder=1, c='r', lw=1.5, ls='dashed', alpha=0.7)

plt.axvline(178, 0, 1, zorder=1, c='r', lw=1.5, ls='dashed', alpha=0.7);

Here, we find clear peaks indicating an increased probability for the chaotic scenario, i.e. that previously gained information about the market dynamics has become useless. Lets assume that we are concerned about market behavior as soon as there is at least a 1% risk that normal market dynamics can not describe the current price movement. This leaves us with three distinct events in the following time steps:

In [13]:

p = S.getTransitionModelProbabilities('chaotic', local=True)

np.argwhere(p > 0.01)

Out[13]:

array([[ 59],

[181],

[382]], dtype=int64)

These time steps translate to the following trading minutes: 10:31am, 12:33pm and 3:54pm.

The first peak at 10:31am directly follows the release of the Texas Manufacturing Outlook Survey of the Dallas Fed. The publication of this set of economic indicators has already been announced by financial news pages before the market opened. While the title of the survey (“Texas Manufacturing Activity Continues to Expand”) indicated good news, investors reacted sceptically, possibly due to negative readings in both the new orders index and growth rate of orders index (c.f. this article).

Note: This first peak would be more pronounced if we had supplied a prior distribution that constrains strong parameter fluctuations!

The underlying trigger for the second peak, shortly after 12:30pm, remains unknown. No major macroeconomic indicators were published at that time, at least according to some economic news sites (see e.g. nytimes.com or liveindex.org).

The last peak at 3:54pm is likely connected to the imminent market close at 4pm. To protect themselves from unexpected news after trading hours, market participants often close their open positions before market close, generally leading to an increased volatility. If large market participants follow this behavior, price corrections may no longer be explained by normal market dynamics.

This example has shown that bayesloop’s OnlineStudy class can

identify periods of increased volatility as well as periods of increased

price stability (accompanied by a negative correlation of subsequent

returns), and further automatically tunes its current assumptions about

market dynamics. Finally, we have demonstrated that bayesloop serves

as a viable tool to detect “anomalous” price corrections, triggered by

large market participants or news announcements, in real-time.